Equation

Forward:

1 | e = embed(x) |

Backward:

1 | dr = dl * 2 * (r-y) |

Experiment 1.

Experiment setting

- embed weights as zero

- fc weights as uniform random by default

- fc bias as uniform random by default

- use relu

Intermediate values

Forward:

1 | e = embed(x) = 0 |

Backward:

1 | dr = dl * 2 * (r-y) != 0 |

Result

The de of embed updates, db of fc updates, but dw of fc does not update.

But because this iteration update the de, so at the next iteration, the e will have value, so that dw = df * e will have value, and fc will update as normal.

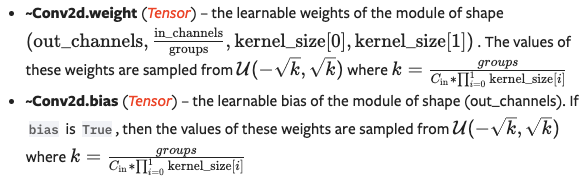

The weights of embedding updates as normal. Because the bias in Pytorch are sampled from uniform distribution by default(as the following figure). Even though the w * e is zero, the f = w * e + b still has values, then df and dx = df * w has value, then the de can update.

Experiment 2.

Experiment setting

- embed weights as zero

- fc weights as uniform random by default

- fc no bias or bias as zero

- use relu

Intermediate values

Forward:

1 | e = embed(x) = 0 |

Backward:

1 | dr = dl * 2 * (r-y) != 0 |

The de and dw both do not update.

Because f = w * e = 0, then df is zero, dw and de are zero then.

But if the gradient of relu change to:

1 | 1 if f >= 0 else 0 = 0 |

rather than:

1 | 1 if f > 0 else 0 = 0 # relu in pytorch |

The df will be 1, and de can update then.

Experiment 3.

Experiment setting

- embed weights as zero

- fc weights as uniform random by default

- fc no bias or bias as zero

- use leakyrelu

Intermediate values

Forward:

1 | e = embed(x) = 0 |

Backward:

1 | dr = dl * 2 * (r-y) != 0 |

The de, but dw does not update.

Just like mentioned above, the df will be 0.01, and de can update then.

Experiment 4.

Experiment setting

- embed weights as zero

- fc weights as zero

- fc no bias or bias as zero

- use leakyrelu

Intermediate values

Forward:

1 | e = embed(x) = 0 |

Backward:

1 | dr = dl * 2 * (r-y) != 0 |

The de and dw both do not update.

Because e and w are zero, so dw = df * w and dx = df * e are zero.

Experiment 5.

Experiment setting

- embed weights as ones

- fc weights as ones

- fc no bias or bias as zero

- use leakyrelu

Intermediate values

Forward:

1 | e = embed(x) = 1 |

Backward:

1 | dr = dl * 2 * (r-y) != 0 |

All cells in the de and dw update with the same direction.

Because e and w are same, and df cames from a single loss value.

So, in this case, no matter how many the weights there are, it can only be regarded as a single weight.

Summary

From the equation, we know:

1 | # forward |

The weight of full-connection dw is affected by the output of embedding e, and the weight of embedding of de(or dx) is affected by the weights of full-connection w.

Both of them are affected by gradient from the next layers df.

So, even if we set the weights of embedding as zeros, since its gradient is calculated from the dx = df * w and de lookuped from dx, only if we keep df * w from the next layer have values, the de(from dx) will update as normal. So please be careful about the w and graident vanish issue of the df from the next layer.